TL;DR: The “ChatGPT Breach” Headlines vs. Reality

Headlines are screaming "ChatGPT breach!" right now, but if you’re an average ChatGPT user, you can calm down (for now). The reality is nuanced, but the lesson is critical.

- Was ChatGPT hacked? Technically, no. OpenAI’s core servers (where users’ chats and models live) weren’t impacted. The breach happened at Mixpanel, a third-party analytics vendor OpenAI used to track user behavior.

- Who should care? If you are a developer, entrepreneur, or business using the OpenAI API platform (platform.openai.com), some of your data was likely exposed.

- Who wasn’t affected? If you only use the standard ChatGPT consumer app (iOS/Android/Web) for chatting, you were untouched by this incident.

Now the part people skip: even if this OpenAI breach hit “only API users,” it’s still everyone’s problem. Why?

This OpenAI security incident is a loud alarm bell. Headlines about major hacks seem to be a weekly event nowadays. If a company with the resources of OpenAI can end up with a third-party point of failure, we should expect two things: more vendor leaks and smarter phishing campaigns targeting all users, not just developers.

If the next OpenAI data breach ever lands closer to consumer ChatGPT, you don’t want to be learning security basics that day.

That’s why we’ll collect key privacy principles at the end of this article and explain why private services like Atomic Mail are the ultimate choice.

What Happened: The Vendor Attack

Modern software relies heavily on third-party integrations. OpenAI, like many tech giants, used Mixpanel for analytics to track user engagement on its API platform (platform.openai.com).

The breach was not the result of a failure in OpenAI’s own infrastructure, but rather the exploitation of access granted to this trusted partner. This was a classic supply chain attack.

The vector (smishing): According to Mixpanel’s own response, the breach was caused by a social engineering campaign that targeted Mixpanel employees. The attackers probably used urgent-sounding SMS messages (e.g. fake MFA resets) to steal credentials and bypass authentication protections.

However, this does not make OpenAI innocent. In cybersecurity, we have a saying: 'You are only as secure as your vendor’s worst intern.' Therefore, OpenAI is still responsible for lost user data.

Timeline of events:

- November 9, 2025: Attackers access Mixpanel systems and export customer datasets.

- November 9–25, 2025: Forensic investigation by Mixpanel to identify affected customers.

- November 25, 2025: OpenAI is officially notified of the breach.

- November 26–27, 2025: Public disclosure and user notification.

What Information Leaked (And What Didn't)

The good news for casual ChatGPT users is that the leaked data concerned only users of the OpenAI API platform:

Impacted users: API accounts. This includes solo developers building apps, enterprise admins managing corporate accounts, startups and entrepreneurs automating their workflows. If you’ve ever logged into platform.openai.com, you are in the blast radius.

Not impacted: typical ChatGPT users, who only use the chat interface (chatgpt.com).

❌ What leaked

- Name provided on the API account

- Email address associated with the API account

- Approximate location derived from the browser (city/state/country)

- Operating system + browser used to access the API account

- Referring websites

- Organization IDs or User IDs associated with the API account

✅ What did NOT leak

- ChatGPT conversation content (prompts, outputs)

- API requests or API usage data

- Passwords or login credentials

- API keys

- Payment details

- Government IDs

How OpenAI Responded

When the OpenAI data breach was exposed, the company took a "scorched earth" approach with the vendor responsible:

- Removed/terminated Mixpanel from production: OpenAI says it removed Mixpanel from production while investigating the OpenAI security incident.

- Reviewing datasets + monitoring for misuse: OpenAI says it reviewed the affected datasets and is monitoring for misuse after the OpenAI breach, especially the kind that shows up as realistic phishing, fake “account flags,” and credential-stuffing attempts.

- Expanding vendor security reviews: In a move we see after almost every security incident, the company pledged to "expand security reviews" across their entire ecosystem.

Our take: It’s a nice sentiment, but in the modern web, your data travels through dozens of third-party tools (analytics, customer support bots, billing processors) before it even hits the database. A promise to "review vendors" is like promising to catch every raindrop.

And let's not forget other recent major data breaches where the same pattern played out. Take the Discord breach, for example, in which more than 70,000 government ID photos were exposed.

User Reaction: Panic, Confusion, and the “Trust Deficit”

Of course the community reacted with anger and frustration across forums and social media. That part is predictable.

What’s more interesting is why this OpenAI breach hit a nerve beyond developers.

If you zoom out, the OpenAI data breach landed in a tense moment. Just weeks ago, OpenAI rolled out a controversial Age ID Verification wave, forcing thousands of users to upload government IDs to access certain features.

Now, those same users are asking a reasonable question: “If you can’t protect email addresses from a simple analytics hack, how are you going to protect my Passport scan?”

The fallout:

- Subscription cancellations: We are seeing a spike in users cancelling ChatGPT Plus, citing "privacy fatigue."

- The realization: People are waking up to the fact that their data is the product, and currently, that product is being spilled on the floor.

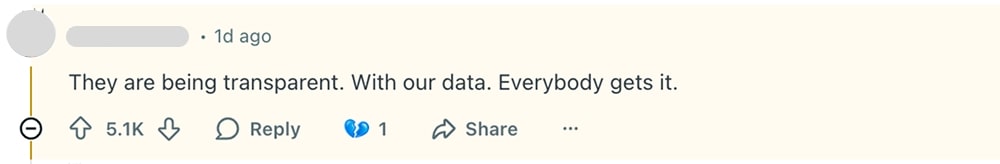

Here are some comments from a related Reddit thread showing people's opinions (names are hidden for privacy reasons):

And that’s the big lesson of this OpenAI data breach: users aren’t just assessing technical risk. They’re watching the trend line.

Impact Analysis: The Hidden Risks

The dataset exported from Mixpanel poses potential risks to OpenAI API users.

Remediation Guide

For administrators (teams, org owners, founders)

1) Strict MFA enforcement:

- Log in to platform.openai.com. Ensure MFA is enabled.

- If possible, switch from SMS MFA to a TOTP Authenticator App (Google Authenticator, Authy) or a hardware key (YubiKey). SMS is vulnerable to the very same "smishing" and SIM-swapping tactics used to breach Mixpanel.
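To see why an authenticator app does not depend on your phone carrier, here is a minimal sketch of how a TOTP code is computed under RFC 6238 (HMAC-SHA1 variant). The function name `totp` and its parameters are our own illustration, not any vendor's API. The code is derived locally from a shared secret and the current time, so nothing travels over SMS.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, at_time: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Compute a time-based one-time password (RFC 6238, HMAC-SHA1)."""
    if at_time is None:
        at_time = time.time()
    # Number of whole time steps since the Unix epoch.
    counter = int(at_time // step)
    # HMAC the counter as an 8-byte big-endian integer.
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation: the low 4 bits of the last byte select an offset.
    offset = digest[-1] & 0x0F
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    # Keep the last `digits` decimal digits, left-padded with zeros.
    return str(code % 10 ** digits).zfill(digits)
```

With the RFC 6238 test secret, `totp(b"12345678901234567890", at_time=59, digits=8)` returns `"94287082"`. In practice you would rely on an authenticator app or hardware key rather than your own implementation.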

2) Treat inbound email as an attack surface:

- Create a mental filter for any email claiming to be from OpenAI. Check the sender address: it must end in @openai.com. Keep in mind that sender addresses can be spoofed, so a correct-looking address alone does not prove the email is genuine.

- Be skeptical of emails referencing your "Organization ID." Attackers now have this data and will use it to sound legitimate.
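The sender check above can be sketched in a few lines of Python. This is a rough illustration, not a complete anti-phishing filter: the function names are hypothetical, the trusted domain is simply the one named in this guide, and a passing result only means the From header looks right. Because that header can be forged, treat a pass as necessary but not sufficient.

```python
from email import message_from_string
from email.utils import parseaddr

# Domain taken from the advice in this guide.
TRUSTED_DOMAIN = "openai.com"


def sender_domain(raw_message: str) -> str:
    """Return the lowercased domain of the From address, or '' if absent."""
    msg = message_from_string(raw_message)
    # parseaddr separates the display name from the real address, so a
    # display name such as "security@openai.com" cannot fool the check.
    _display_name, address = parseaddr(msg.get("From", ""))
    return address.rpartition("@")[2].lower()


def from_header_matches_openai(raw_message: str) -> bool:
    """True only if the From address is exactly at the trusted domain."""
    # Exact comparison: a loose 'ends with openai.com' test would also
    # accept lookalike domains such as evil-openai.com.
    return sender_domain(raw_message) == TRUSTED_DOMAIN
```

For example, a message with the header `From: "security@openai.com" <attacker@evil.example>` fails the check, because only the address inside the angle brackets is compared.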

3) Rotate API keys:

Although OpenAI stated keys were not leaked, "rotate on breach" is a standard security measure. Rotate all production API keys to invalidate any theoretical exposure.

4) Audit logs:

Review the OpenAI usage logs for any unusual spikes in activity that could suggest an Organization ID fuzzing attack.
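If you export daily request counts from your usage dashboard, a simple script can flag the spikes worth a closer look. The sketch below assumes you already have the counts as a plain list of numbers (how you export them is up to you). The function name, the 7-day window, and the 3-sigma threshold are illustrative choices, not OpenAI guidance.

```python
from statistics import mean, stdev


def flag_spikes(daily_counts, window=7, threshold=3.0):
    """Return indexes of days that sit far above the trailing average."""
    flagged = []
    for i in range(window, len(daily_counts)):
        history = daily_counts[i - window:i]
        baseline = mean(history)
        # Floor the spread at 1 so a perfectly flat history does not
        # turn every tiny increase into an alert.
        spread = max(stdev(history), 1.0)
        if daily_counts[i] > baseline + threshold * spread:
            flagged.append(i)
    return flagged


# Made-up sample numbers for illustration only.
sample = [100, 104, 98, 101, 99, 103, 97, 102, 950, 100]
suspicious_days = flag_spikes(sample)
```

With the made-up sample above, only the day with 950 requests (index 8) is flagged. A flagged day is a prompt to investigate, not proof of an attack.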

5) Company-wide alert:

Send a memo now. Tell your team: "We will never ask for your password via email. Ignore any 'OpenAI Security' alerts that don't come from us."

For individual users

Even if you’re a regular ChatGPT user and OpenAI says you weren’t affected by this OpenAI data breach, we still recommend three boring moves that save people every day:

1) Enable MFA on your email and any OpenAI-related account you use.

2) Expect phishing. After an OpenAI breach, attackers could send emails claiming your data is compromised too, then push you to click a “secure your account” link. Don’t. Rule of thumb: never log in from an email link. Open a new tab and type the site address yourself.

3) Stop using your real email. Especially if it’s a big-tech inbox tied to years of signups, receipts, logins, and identity signals. That single address can tell a stranger more about you than you’d ever say out loud.

Instead, use a private email address and/or a dedicated email alias per service.

This isn’t just for the OpenAI security incident. It’s a general rule. We’ll unpack this right below – because email is usually the biggest privacy and security point of failure.

How to Protect Yourself Long-Term

Headlines like this appear every week. Today it's OpenAI/Mixpanel; yesterday it was Discord/5CA; tomorrow it will be another giant. Big Tech's business model relies on harvesting your data, and their security model relies on apologizing after-the-fact and promising not to let it happen again.

The first rule of protecting your data is to choose services that respect your privacy by design.

One of the biggest points of failure in every breach is your email address. It is the master key to everything you have online (accounts, billing receipts, 2FA fallbacks, login alerts, every “verify it’s you” moment). When you use a standard provider (like Gmail or Outlook), you are already being tracked. When that email leaks (and it usually does), it connects every dot of your life.

Here is the Atomic Mail protocol for going more private online:

Rule #1: choose services that respect privacy by design (especially email providers)

Privacy by design means:

- Collect less in the first place (because you can’t leak what you never collected)

- No forced phone numbers unless they’re truly necessary

- No ID scans for unrelated features

- Access boundaries so staff can’t casually browse your content

We built Atomic Mail with all these principles in mind from day one. To sign up, you only enter a display name, choose your address, and set a password – no phone number, no extra personal details. And we’re built around a zero‑access concept: even our team technically can’t access your encrypted emails.

Rule #2: decentralize your identity (the “alias” strategy)

Don’t give every service the same email address.

Instead, separate identities:

- one address/alias for AI tools

- one for shopping

- one for finance

- one for social

When a single service leaks (like in this OpenAI breach), attackers get one compartment, not your entire map.

At Atomic Mail you can create multiple email aliases so each signup can get its own identity lane.
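The compartment idea can be sketched as a small bookkeeping helper. This is only an illustration of the strategy: the `AliasBook` class, the alias format, and the `example.com` domain are hypothetical and do not describe how Atomic Mail generates aliases. The point is that each service gets its own address, so a leaked address maps back to exactly one signup.

```python
import secrets


class AliasBook:
    """Tracks one dedicated email alias per service (illustrative only)."""

    def __init__(self, domain: str):
        self.domain = domain
        self._by_service = {}

    def alias_for(self, service: str) -> str:
        """Return the alias for a service, creating it on first use."""
        if service not in self._by_service:
            # A random suffix keeps aliases from being guessed from each other.
            label = secrets.token_hex(4)
            self._by_service[service] = f"{service}-{label}@{self.domain}"
        return self._by_service[service]

    def services_exposed_by(self, leaked_address: str) -> list:
        """List which services used a given leaked address."""
        return [s for s, a in self._by_service.items() if a == leaked_address]


book = AliasBook("example.com")  # placeholder domain
ai_alias = book.alias_for("ai-tools")
shop_alias = book.alias_for("shopping")
```

If `ai_alias` later shows up in a breach dump, `book.services_exposed_by(ai_alias)` returns only `["ai-tools"]`, and the shopping, finance, and social addresses stay unknown to the attacker.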

Rule #3: encrypt what can be encrypted

Encryption won’t stop every security incident. But it does something more useful: it keeps the content you send or store private, so even if a breach happens, attackers can end up with unreadable ciphertext they can’t decrypt.

At Atomic Mail encryption is simple. We’re an encrypted email service built to keep advanced encryption easy to use. You can even send end-to-end encrypted emails to traditional providers via password protection, with no app installs, no plugins, and no setup on their side or yours.

Rule #4: minimize the data you hand out

Every time a site asks for “optional” info, imagine it printed in a future breach report.

- If a form asks for your phone number and it's not required? Leave it blank.

- If an app asks for "Contacts Access"? Deny it.

At Atomic Mail we don’t collect any personal data about you. We may only see the display name you chose at registration. That’s it.

Rule #5: harden recovery

Most account takeovers aren’t “elite hacking.” They’re recovery abuse. If an attacker controls your recovery path (your inbox, your phone number, a weak fallback) they control your account.

To avoid smishing traps, SIM swapping, and other recovery abuses, Atomic Mail offers secure seed phrase recovery, so getting your account back doesn’t hinge on a phone number or a carrier’s bad day.

That’s the protocol. Boring rules, but real results.

🔐 Sign up for Atomic Mail today to protect your identity and information.

FAQ

Was ChatGPT hacked?

Technically, no. OpenAI’s core servers were not breached. This was a supply chain attack targeting a third-party analytics vendor, Mixpanel, which OpenAI used to track usage data.

Who was affected by the OpenAI data breach and what data was stolen?

Who: Primarily developers and businesses using the OpenAI API platform (platform.openai.com). What was stolen: Metadata including email addresses, names, User IDs, and location/device info. What is safe: Passwords, payment info, and chat history were NOT stolen.

I am a casual user and only use ChatGPT chats, was I affected?

No. The breached dataset came from the API platform used by developers. If you only use the standard ChatGPT app for personal chats, your data was not involved.

I am a developer and I use ChatGPT to write code, was my code stolen?

No. Chat history and inputs were not part of the Mixpanel dataset.

Should I delete my OpenAI account?

That is a personal choice. If you stay, ensure you are using a unique, secure email address (like an Atomic Mail alias) so future breaches don't expose your primary identity.

What’s the biggest risk from the OpenAI security incident?

Phishing. The OpenAI breach data makes impersonation more believable. Expect “verify your account” messages.

Will this happen again?

As long as companies rely on complex webs of third-party vendors, yes. The only variable you can control is how much data you give them.

Why is Atomic Mail relevant here?

Because your email is the account-recovery backbone for almost everything. A private email service + aliases reduces the blast radius when the next vendor spill happens. Create your free private Atomic Mail account here.